How the Italian Renaissance can save the smartphone camera

Modern technology clashes with classical art. But they can exist in harmony.

There was something very special going on in 15th- and 16th-century Italy.

The Rinascimento, or ‘rebirth’, was a radical shift from the Middle Ages toward a more modern era of humanity. It grew out of a period of immense social upheaval and change throughout Europe: the Black Death caused major migrations, forcing cultures to intermingle and driving the growth of more major cities. Technologies like the printing press spread new ideas faster than anything before them. And a rediscovery of ancient Greek and Roman texts led to a focus on the humanities and humanism, a way of thinking grounded in logic and reason.

But probably the most recognizable aspect of the Renaissance is the art. It’s impossible to think about art itself without considering so many of the paintings and sculptures produced during that era. Giotto. Da Vinci. Michelangelo and Caravaggio. The list of artists who fundamentally changed the way art is made goes on for pages and pages. But so much of the rapid rise in the quality of art during that period rests on one fundamental concept. Light.

Before the Renaissance, much of the art being produced was... flat. You had hard line drawings with minimal depth. That isn’t to say there wasn’t light in these paintings, but it wasn’t a key focus. Much of that art used global light, filling the entire scene with an even exposure. It wasn’t until the Renaissance that artists started to understand the properties of light, and how it could give form to a painting.

Leonardo da Vinci’s roughly 20 surviving paintings helped define sfumato: removing the harsh lines that outlined a figure on canvas and instead using soft transitions between colors to give form to a 2D image. There is a softness to the texture that better emulates how our skin actually looks and curves than the line drawings that came before. Skin is largely soft and plump, and its tonality moves from color to color in an almost invisible gradient, all based on the source and direction of light.

Similarly, Caravaggio used strikingly accurate depictions of light and shadow to bring depth to paintings where foreground and background had traditionally been laid out as separate, flatly painted planes. It’s this shading that let Renaissance art pop off the canvas, so it felt like a realistic representation of everyday society rather than a flat, mythical depiction of some elevated form of humanity.

Perhaps Caravaggio’s most famous painting, The Calling of Saint Matthew, directs your eye across the canvas using the light from a single window, landing on the most important characters in the story. The light tells a story in itself, but maybe more importantly, it gives form and life to the characters in the painting. Light bounces around the room, falls more softly across the shaded parts of the subjects, and eventually fades into complete darkness. It’s this accurate depiction of light and shadow, this chiaroscuro, that Caravaggio pushed further than almost anyone before him. It adds a realism that is at once artistic and intentional. Caravaggio is painting the light just as much as he paints the figures.

This focus on controlling light has remained a fundamental part of the art world since the 15th century. Shading and contour helped move animation from 2D into 3D space. And photography as an art was born not just from the development of instruments for capturing light, but from the artistic decisions about what to do with the light that was captured.

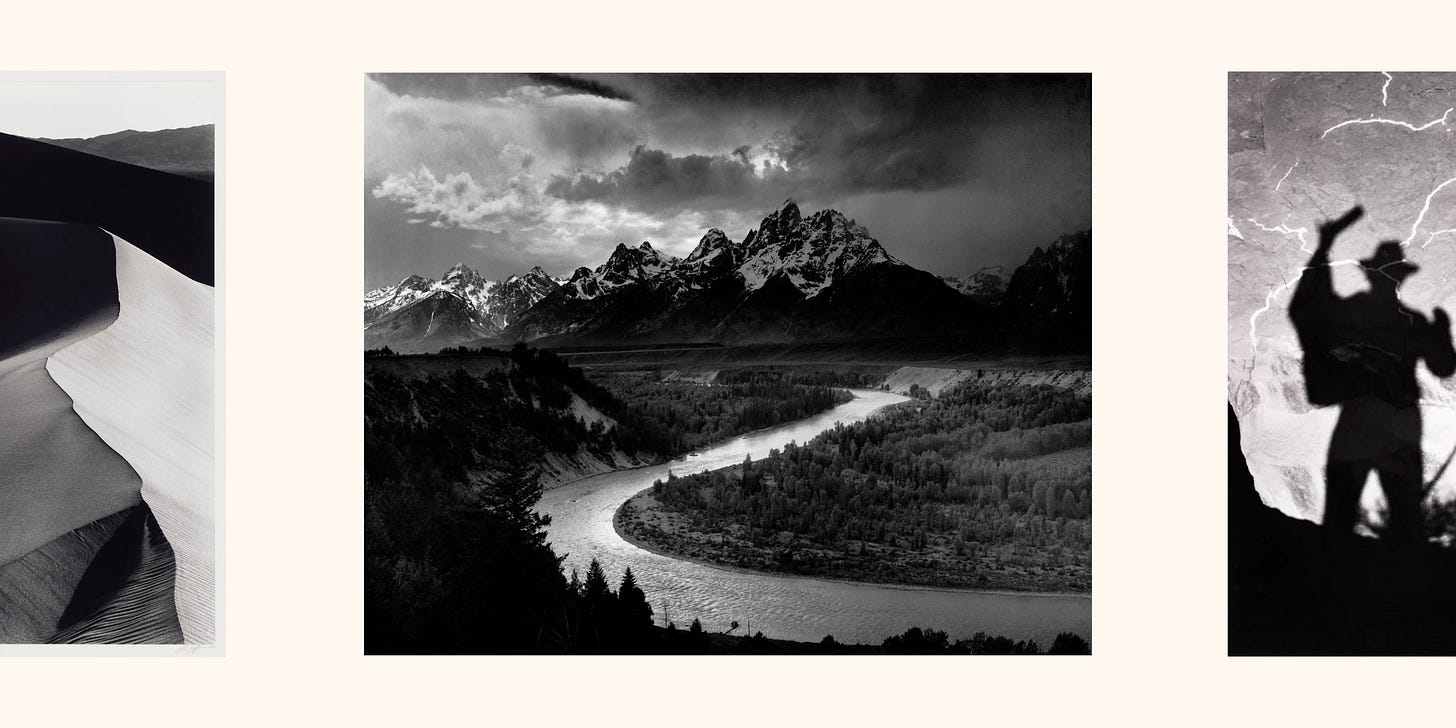

Early photography was founded almost entirely on this Renaissance concept of chiaroscuro. Especially in black-and-white photography, the artist’s control is almost completely about the tonal range of the photograph. This variation between light and dark is what adds dimension to the image. And through development, whether in the darkroom or in a modern editing program, the photographer controls the intensity of the highlights and the depth of the shadows.

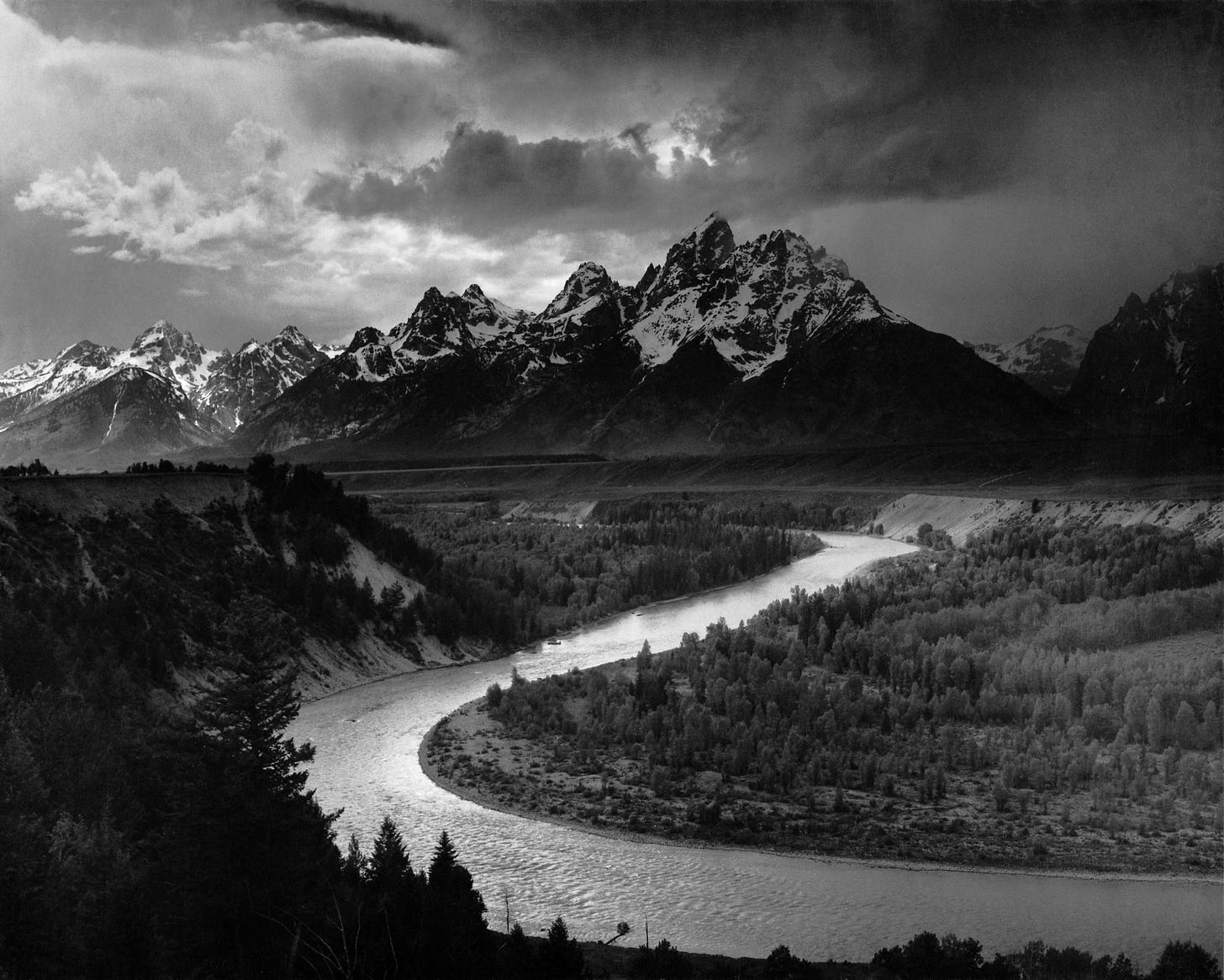

In the 20th century, Ansel Adams, maybe a more modern Caravaggio, knew this possibly better than anyone. I distinctly remember copies of his landscapes hanging throughout my house when I was a kid. You could stare at them for hours and notice the distinct shifts in tonality, from bright whites to deep blacks. They showed beauty through shade. They were monochromatic in color, but so colorful in depth. Adams may not have created every detail of a scene from nothing like the painters of the Renaissance, but his understanding of the importance of tone brought just as much richness to his work.

And even though Adams’ camera gave him control over depth of field, that shift of focus from subject to background, he chose to think much more like a painter than a photographer. He favored tiny apertures like f/64. The f-number is the ratio of the lens’s focal length to the diameter of the opening that lets in light, so f/64 means a very small opening and an extremely deep depth of field. Everything is in focus, and the image reads almost entirely flat, like a canvas.
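To put numbers on why f/64 keeps everything sharp, here is a minimal sketch using the standard hyperfocal-distance formula H = f²/(N·c) + f. The 210 mm lens and the 0.2 mm circle of confusion are assumed values for an 8x10 setup chosen for illustration, not figures from Adams’ own notes.

```python
def aperture_diameter_mm(focal_length_mm: float, f_number: float) -> float:
    """The f-number N is focal length / aperture diameter, so diameter = f / N."""
    return focal_length_mm / f_number


def hyperfocal_mm(focal_length_mm: float, f_number: float, coc_mm: float) -> float:
    """Hyperfocal distance H = f^2 / (N * c) + f.

    Focusing at H keeps everything from roughly H/2 to infinity acceptably sharp.
    """
    return focal_length_mm ** 2 / (f_number * coc_mm) + focal_length_mm


# Hypothetical large-format setup: a 210 mm lens and an assumed 0.2 mm circle of
# confusion (a value sometimes used for 8x10 film).
for n in (8, 64):
    h = hyperfocal_mm(210, n, 0.2)
    print(f"f/{n}: opening {aperture_diameter_mm(210, n):.1f} mm, "
          f"sharp from about {h / 2000:.1f} m to infinity")
```

Under these assumptions, stopping down from f/8 to f/64 pulls the near limit of sharpness from roughly 14 m to under 2 m, which is why the whole landscape renders in focus.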

Part of Adams’ reasoning was to force your eye to examine the whole image. With no plane of focus to guide your gaze, you’re left to dart around the entire photo. You can see the beauty of the flowing river. The flowers blooming in the meadow. And the towering mountains, looming over the rest of the canvas. To Adams, all of nature was important. But without shallow depth of field to separate his subjects, those tonal shifts between highlights and shadows were everything, just as they were to Renaissance artists. He had to practice chiaroscuro just as much as Caravaggio did hundreds of years before him.

And even though Adams’ work was made almost a century ago, so much of it still looks richer than so much of today’s photography. It looks modern. Hell, it looks better than modern. But a huge reason his images look so incredible, beyond his understanding of light, is that for a long stretch after Adams made his photos, cameras actually got... fundamentally worse.

Adams worked in large format: flexible wooden boxes that captured light and projected it onto huge 4x5 and 8x10-inch sheets of film. Even though these cameras look dated by today’s standards, the quality this format can capture is pretty freakin’ incredible. The massive sheet of film the lens projects onto inside that box allows for incredibly minute shifts in tonality and color, centimeter by centimeter. Large format has an incredibly gradual sfumato and, in the case of Adams’ black-and-white work, a more gradual chiaroscuro: a more granular tonality that creates depth and realism.

But one of the fundamental cornerstones of technology is miniaturization. How do we take a big thing and figure out how to make it... smaller? More portable? We’ve seen it in radios, computers, music players, and data storage. How do we take something that adds value to our lives and figure out how to take it with us, in as compact and portable a form as possible? The computer shrank from a mainframe to a desktop to a laptop to a smartphone, and hopefully one day, to an ambient system with no computers in sight at all. It was destined to happen to cameras, too.

Large format gave way to medium format. Medium format gave way to 35mm and even half-frame. And as the cameras shrank, so did the film we projected our images onto. Even if the glass could project the same scene onto a smaller frame, the tonal detail we could capture and enlarge diminished, all for the sake of portability. With less area to project onto, there was physically less sfumato and chiaroscuro available to the artist. Less granular tonality to work with.

Then came digital photography, and eventually mobile phones with built-in cameras. But those cameras were tiny, because just like the computer and the camera, the phone was being miniaturized to be as compact as physically possible. The same scene that was once projected onto a massive 8x10-inch sheet of film was now being captured by a digital sensor smaller than your pinky nail.
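A quick sketch of just how much capture area was lost along the way. Film sizes are nominal; the phone sensor dimensions are an assumption, derived from a 4032 x 3024 sensor with 1.4 µm photosites, which is typical of small 1/2.55-inch phone sensors.

```python
# Approximate capture areas in mm^2. Film sizes are nominal. The phone entry is
# an assumption: 4032 x 3024 photosites at a 1.4 um (0.0014 mm) pitch.
FORMATS_MM = {
    "8x10 in sheet film": (254.0, 203.2),
    "4x5 in sheet film": (127.0, 101.6),
    "35mm full frame": (36.0, 24.0),
    "phone sensor (assumed)": (4032 * 0.0014, 3024 * 0.0014),
}


def area_mm2(width_mm: float, height_mm: float) -> float:
    """Area of a rectangular capture surface."""
    return width_mm * height_mm


phone = area_mm2(*FORMATS_MM["phone sensor (assumed)"])
for name, dims in FORMATS_MM.items():
    a = area_mm2(*dims)
    print(f"{name:24s} {a:10.1f} mm^2  ({a / phone:7.1f}x the phone sensor)")
```

With these numbers, even 35mm film has roughly 36 times the area of the phone sensor, and a single 8x10 sheet has over 2,000 times the area.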

But hey, it turns out humans are pretty addicted to communication. Cell phones allowed for faster communication than pretty much any other method before them, so they became a whole industry. Really, really quickly. New models came out every year, and they got better every year. Faster every year. The screens got better, the batteries got better, and the chipsets got better. But unfortunately for the cameras, well, we can’t change physics.

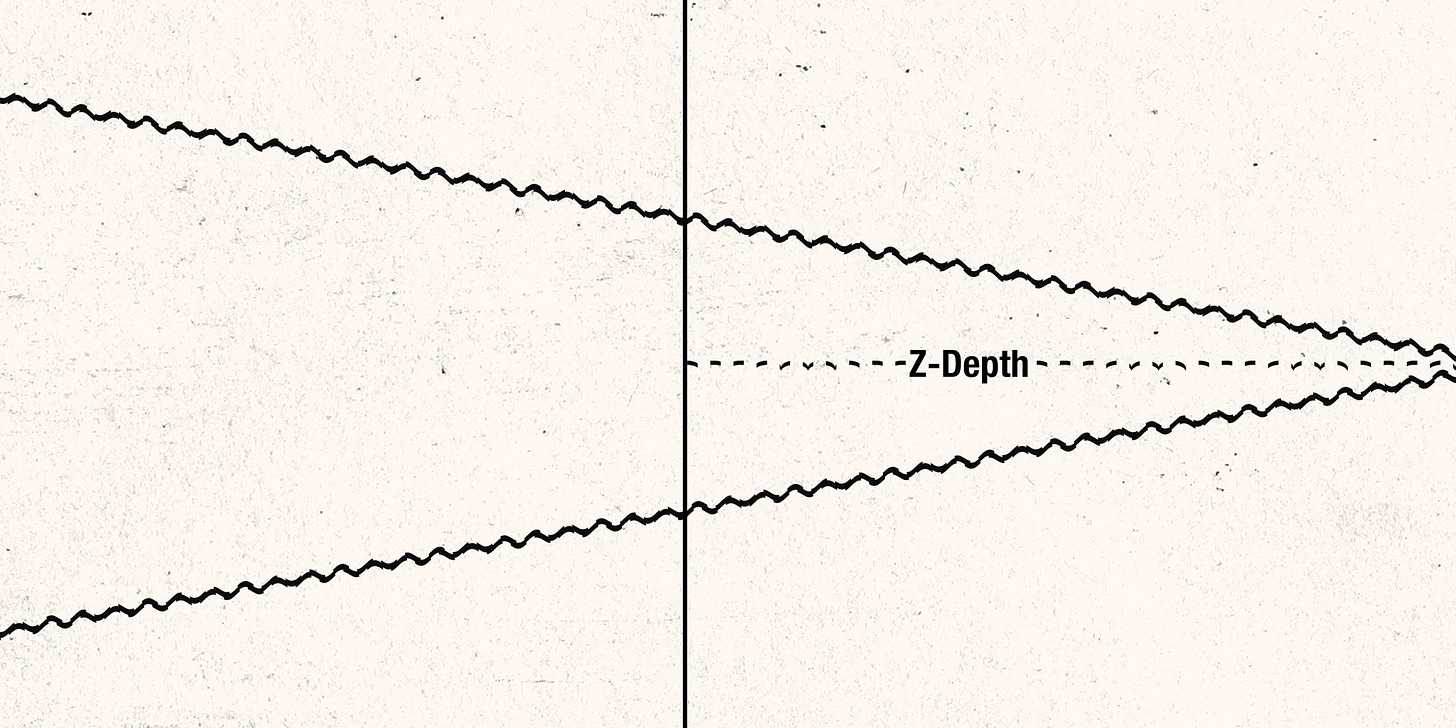

That obsession with miniaturization and thinness stuck around. Smartphone camera sensors weren’t getting bigger; they mostly stayed the same size, if they didn’t get even smaller. And as we made our computers and phones thinner and thinner, it became even harder to cram a bigger image sensor into them. To project a scene across a larger sensor at the same field of view, you need a longer focal length, which means more Z-depth: more distance between the sensor and the optical center of the lens. Think about those large format cameras again. They were incredibly deep. When you pull a magnifying glass away from the pavement, the circle of light gets bigger. The same thing happens in your phone if you want a bigger sensor.
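A minimal sketch of why a bigger sensor needs a deeper camera, using the thin-lens relation for a lens focused at infinity, f = (d/2) / tan(FOV/2), where d is the sensor diagonal. The 75° field of view and both diagonals are assumed, illustrative values, and real phone lenses are compound designs, so focal length is only a rough proxy for Z-depth.

```python
import math


def focal_length_for_fov(sensor_diagonal_mm: float, diagonal_fov_deg: float) -> float:
    """Thin-lens approximation for a lens focused at infinity:
    f = (d / 2) / tan(fov / 2)."""
    half_angle = math.radians(diagonal_fov_deg) / 2
    return (sensor_diagonal_mm / 2) / math.tan(half_angle)


# Assumed values: a ~75 degree diagonal field of view (roughly a 28 mm
# full-frame-equivalent main camera) and approximate sensor diagonals.
FOV_DEG = 75.0
for name, diagonal in [("small phone sensor", 7.06), ("full frame", 43.27)]:
    print(f"{name}: ~{focal_length_for_fov(diagonal, FOV_DEG):.1f} mm focal length")
```

The focal length scales linearly with the sensor diagonal, so doubling the sensor’s size at the same framing roughly doubles how far the lens must sit from it.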

Some phones tried to buck this thinness trend in pursuit of better imaging. The Nokia 808 PureView from 2012 used a sensor so big that the camera assembly took up half the phone and stuck out more than maybe any other phone on the market. A sensor that size allows for either bigger pixels, capable of catching more photons for a brighter, cleaner image, or, in the case of the 808 PureView, more resolution, since you can subdivide the sensor into more pixels and still keep the same photosite size as the competition.
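The trade-off between bigger pixels and more pixels is simple arithmetic on the sensor’s area. Here is a sketch with two hypothetical sensors: a small phone sensor and one twice as wide and twice as tall. These are not the 808 PureView’s actual specifications.

```python
def pixel_pitch_um(sensor_width_mm: float, pixels_across: int) -> float:
    """Photosite pitch in micrometres: sensor width divided by pixel count."""
    return sensor_width_mm * 1000 / pixels_across


def megapixels(sensor_w_mm: float, sensor_h_mm: float, pitch_um: float) -> float:
    """Approximate photosite count (in millions) for a given sensor size and pitch."""
    across = sensor_w_mm * 1000 / pitch_um
    down = sensor_h_mm * 1000 / pitch_um
    return across * down / 1e6


# Hypothetical sensors: a small phone sensor, and one twice as wide and tall.
small = (5.6448, 4.2336)
big = (2 * small[0], 2 * small[1])

print(f"small sensor pitch: {pixel_pitch_um(small[0], 4032):.2f} um")
# Option 1: keep the same 1.4 um pitch and gain resolution.
print(f"big sensor, same 1.4 um pitch: {megapixels(*big, 1.4):.1f} MP")
# Option 2: keep the same pixel count and gain bigger photosites.
print(f"big sensor, same pixel count: {pixel_pitch_um(big[0], 4032):.2f} um")
```

With four times the area, the big sensor can hold about four times the pixels (roughly 48.8 MP) at the same pitch, or keep the same pixel count with 2.8 µm photosites, each collecting about four times as much light.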

But those devices didn’t catch on, and kept getting beaten by thinner, lighter phones. At the time, thinness was the bigger priority, since sharing photos straight from your phone wasn’t yet an everyday habit and the mobile internet was still pretty new. But as the internet matured, the camera became more and more important. Suddenly we could capture our world and share it instantly. A pretty perfect pairing for that inherent human need for communication and connectedness.

Since computing power was advancing far faster than optics could, engineers needed ways to use computation to make photos better without making phones thicker. They had to cheat physics with chipsets. So they started tuning how photos were processed to make them look as good as possible from the tiny sensors that fit in these crazy thin devices.

If you want to brighten an image from a tiny sensor that can’t capture a lot of light, you have to amplify the signal with something called electronic gain. You’re electronically brightening those pixels. But all digital photos have noise: random imperfections in the pixels caused by the random arrival of photons, heat in the sensor, and the read-out electronics. So when you amplify the whole signal, you’re also amplifying that noise. If you can saturate the pixels with a lot of light and don’t have to amplify the image as much, you’re gonna see less noise. But these tiny sensors just can’t capture that much light. So there’s a lot of gain goin’ on here.
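Here is a minimal simulation of why gain can’t rescue a dim exposure. It assumes shot noise can be approximated as Gaussian with variance equal to the mean photon count, plus a made-up read noise of 3 electrons; the photon counts are illustrative, not measurements from any phone.

```python
import random
import statistics


def capture(mean_photons: float, read_noise: float, n: int, rng: random.Random) -> list[float]:
    """Simulate n readings of a uniformly lit patch.

    Shot noise is approximated as Gaussian with variance equal to the mean
    photon count; read noise is added in quadrature.
    """
    sigma = (mean_photons + read_noise ** 2) ** 0.5
    return [rng.gauss(mean_photons, sigma) for _ in range(n)]


def snr(samples: list[float]) -> float:
    """Signal-to-noise ratio: mean divided by standard deviation."""
    return statistics.mean(samples) / statistics.pstdev(samples)


def apply_gain(samples: list[float], gain: float) -> list[float]:
    """Multiply every reading by the same factor: brighter, but noise scales too."""
    return [s * gain for s in samples]


rng = random.Random(0)
dim = capture(mean_photons=100, read_noise=3, n=5000, rng=rng)
bright = capture(mean_photons=10_000, read_noise=3, n=5000, rng=rng)
boosted = apply_gain(dim, 100)

print(f"dim patch SNR:        {snr(dim):.1f}")
print(f"dim patch, 100x gain: {snr(boosted):.1f}")
print(f"well-exposed patch:   {snr(bright):.1f}")
```

Gain multiplies signal and noise by the same factor, so the boosted patch is brighter but no cleaner. Only collecting more light raises the signal-to-noise ratio.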

Then, to get rid of that noise, you have to use noise reduction: algorithms that, in their simplest form, average the colors of neighboring pixels to smooth out those imperfections. But that averaging also fundamentally reduces the sfumato, the granular tonality that gives images contour and depth. So you’re left with something that looks plasticky and flat.
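The simplest version of that averaging is a box blur. This one-dimensional sketch is a stand-in, not any phone’s actual denoiser. It shows both effects at once: random noise shrinks, but so does a fine, real texture in the signal.

```python
import random
import statistics


def box_blur(values: list[float], radius: int = 1) -> list[float]:
    """Replace each sample with the mean of its neighbours within `radius`
    (indices past the edges are clamped). The simplest noise-reduction filter."""
    n = len(values)
    out = []
    for i in range(n):
        window = [values[min(max(j, 0), n - 1)] for j in range(i - radius, i + radius + 1)]
        out.append(sum(window) / len(window))
    return out


rng = random.Random(1)
noise = [rng.gauss(0.0, 1.0) for _ in range(2000)]
denoised = box_blur(noise, radius=2)
print(f"noise std before: {statistics.pstdev(noise):.3f}, after: {statistics.pstdev(denoised):.3f}")

# A fine, real texture (alternating light and dark) gets averaged away too.
texture = [1.0, -1.0] * 5
print([round(v, 3) for v in box_blur(texture)])
```

A 5-sample average cuts random noise to roughly 1/√5 of its original level, but a detail that alternates from pixel to pixel is squashed to a third of its amplitude by even a 3-sample average. The filter can’t tell noise from subtle tonal texture.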

To try to fix that, phones add sharpening, which boosts contrast along edges to bring back the crisp look you get from a good photo. But contrast is the relationship between the light and dark areas of an image, and pushing it along every edge erases the subtle in-between tones. You’re reducing that tonal granularity even more. There is even less chiaroscuro. The image might look sharp again, but it’s even flatter than before.
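A common sharpening technique is the unsharp mask: add back the difference between the image and a blurred copy of itself. This is a one-dimensional sketch of that general technique, not a specific phone pipeline. Applied to a single edge, it shows the overshoot that produces halos.

```python
def box_blur(values: list[float], radius: int = 1) -> list[float]:
    """Mean of neighbours within `radius`, with indices clamped at the edges."""
    n = len(values)
    out = []
    for i in range(n):
        window = [values[min(max(j, 0), n - 1)] for j in range(i - radius, i + radius + 1)]
        out.append(sum(window) / len(window))
    return out


def unsharp_mask(values: list[float], amount: float = 1.0, radius: int = 1) -> list[float]:
    """Sharpen by adding back the detail a blur removes: v + amount * (v - blur(v))."""
    blurred = box_blur(values, radius)
    return [v + amount * (v - b) for v, b in zip(values, blurred)]


edge = [0.0, 0.0, 0.0, 1.0, 1.0, 1.0]   # a dark-to-light edge
sharpened = unsharp_mask(edge)
print([round(v, 3) for v in sharpened])
```

The pixels beside the edge get pushed darker than black and brighter than white. Once those values are clipped to the displayable range, the transition becomes a harder line with a bright and dark halo, the opposite of sfumato.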

That’s how we ended up with the “phone photo” look, and why you’ve generally always been able to tell a phone photo from one taken on a full-fledged camera. But phones pretty quickly became far more computationally advanced than cameras. Cameras have physics on their side, but phones... they’ve got technology.

This led to the development of something called computational photography. You might have heard the term before. Technically, just processing an image the way I described above is computational. But engineers pretty quickly figured out that computers can do some pretty insane things to get around the small-sensor problem.

Google’s Pixel phones can take pretty great photos of the stars with Astro Mode and see in the dark with Night Sight. They can separate the foreground from the background for a bigger-sensor “look” with portrait mode. But one of the foundational techniques of computational photography over the last decade, and probably the most important, is HDR+.

HDR stands for High Dynamic Range. In photography, it’s the process of capturing detail across as wide a range of brightness as possible, from the deepest shadows to the brightest highlights. Without HDR, when we bump the gain on a phone photo like we talked about before, we get a brighter image, but it’s really easy to blow it out and lose the highlight data. With little dynamic range in a high-contrast scene, you’re either gonna blow out the highlights or lose the shadows.

Google’s then head of computational imaging, Marc Levoy, led the team behind a solution called HDR+, which debuted on Nexus phones before becoming a headline feature of the first Pixel in 2016. I actually made a video about how this works a few years ago, but effectively: when your viewfinder is open, the phone is constantly capturing frames, since it has to show you those images on your screen anyway. So Google decided to take advantage of this. It captured a series of super short exposures and stored them in memory, aligned the frames, averaged the color of every stacked pixel, then applied gain to get a brighter image. Normally this is where you’d see noise, but because noise is random and the phone was averaging the same scene across multiple frames, the noise largely canceled out.
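Here is a minimal sketch of why stacking works, assuming perfectly aligned frames with independent Gaussian noise and a made-up test scene. The real HDR+ pipeline aligns tiles and uses a considerably more sophisticated, robust merge; this shows only plain averaging followed by gain.

```python
import random
import statistics


def noisy_frame(scene: list[float], sigma: float, rng: random.Random) -> list[float]:
    """One short exposure: the true scene plus independent Gaussian noise."""
    return [p + rng.gauss(0.0, sigma) for p in scene]


def merge_by_averaging(frames: list[list[float]]) -> list[float]:
    """Average each pixel position across frames assumed to be perfectly aligned."""
    return [statistics.fmean(stack) for stack in zip(*frames)]


def residual_noise(image: list[float], reference: list[float]) -> float:
    """Standard deviation of the difference between an image and a reference."""
    return statistics.pstdev([a - b for a, b in zip(image, reference)])


rng = random.Random(42)
scene = [0.02 * (i % 10) for i in range(2000)]   # hypothetical dim test scene
single = noisy_frame(scene, 0.05, rng)
burst = [noisy_frame(scene, 0.05, rng) for _ in range(16)]
merged = merge_by_averaging(burst)
brightened = [4 * p for p in merged]             # gain applied after merging

print(f"single frame noise:  {residual_noise(single, scene):.4f}")
print(f"16-frame average:    {residual_noise(merged, scene):.4f}")
print(f"average + 4x gain:   {residual_noise(brightened, [4 * p for p in scene]):.4f}")
```

Averaging N frames cuts random noise by about √N, so 16 frames leave roughly a quarter of the noise. That headroom is what lets the merged image take about 4x gain and still end up no noisier than a single unamplified frame.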

This feature alone cemented Google’s reputation for the best phone cameras for a long time. Pixels could capture great images in really challenging, high-dynamic-range scenes, and they’d look decent! Your phone was capturing a lot more information, so you could retain detail in both the shadows and the highlights.

Now, the danger here is that just because you HAVE all that information doesn’t mean you should always SHOW all of it. Retaining all the highlight and shadow detail is great, but showing all of it produces a flat-looking image. Remember sfumato and chiaroscuro: it’s the tonal granularity that gives form to an image. If everything becomes a midtone and you eliminate the highlights and shadows, you end up with something that looks even flatter than what your phone’s processing is already producing.
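Here is a sketch of the difference between showing everything and shaping the tones. Both curves are hypothetical, chosen only for illustration: a linear curve that pulls every tone toward middle gray, standing in for aggressive shadow lifting and highlight recovery, and a smoothstep S-curve that deepens shadows and brightens highlights.

```python
def flatten(x: float, strength: float = 0.4) -> float:
    """Hypothetical 'show everything' curve: pull every tone toward middle gray."""
    return 0.5 + (x - 0.5) * (1 - strength)


def s_curve(x: float) -> float:
    """Smoothstep S-curve (3x^2 - 2x^3): steeper through the midtones,
    darker shadows and brighter highlights."""
    return 3 * x ** 2 - 2 * x ** 3


tones = [0.1, 0.3, 0.4, 0.6, 0.7, 0.9]   # input tones, 0 = black, 1 = white
for name, curve in [("flattened", flatten), ("s-curve", s_curve)]:
    print(f"{name:10s}", [round(curve(t), 3) for t in tones])
```

The flattening curve lifts a 0.1 shadow to 0.26 and shrinks the gap between 0.4 and 0.6 to 0.12. The S-curve pushes that shadow down to about 0.03 and widens the same midtone gap to about 0.3. The trade-off is that it compresses the extreme ends, which is why keeping the captured data and choosing how to display it are separate decisions.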

Now fortunately, Google’s Marc Levoy knows this. Marc is on record saying he took inspiration from Renaissance artists when tuning HDR+, specifically from Caravaggio. Images from the first few phones that used the processing, and especially the first couple of Pixel phones, really showed this off. You got deeper shadows and brighter highlights while still retaining data. That solidified the Pixels in particular as some of the best phone cameras in the world for a long time.

But as the years went on, manufacturers became obsessed with detail. They figured that the more information they showed, the more they could claim the “best camera”. That pushed computational processing across the board toward showing ALL the shadows and ALL the highlights. Combine that with the processing already compensating for small sensors, and you get an image that’s flat in almost every way.

Here are a few photos I took with Marc at the Pixel 3 and Pixel 4 launch events. I’ll admit I messed up a bit here, because the Pixel 3 reference photo was taken the year before, AT the Pixel 3 event, so the lighting is completely different. But if you look at the Pixel 1, 2, and 4, you can see a MUCH tighter focus on preserving the highlights and lifting the shadows, increasing almost linearly from one generation to the next. The Pixel 1 had a great balance of shadow and highlight detail. It was still a little oversharpened, again an artifact of compensating for noise reduction. But there was color in our skin. There was contour in our faces. As the devices got newer, the intense focus on saving shadow and highlight detail started to remove contrast from our faces and made us look more like zombies than humans.

So here’s the thing. So much of the way we process photos on phones, from compensating for small sensors to computational features like portrait mode and even, in some ways, HDR, is just a stopgap. These are solutions to a problem that existed at a very particular moment in time, when consumers were obsessed with how thin, small, and portable phones could be. In the years since, and especially in the last few years, that sentiment has changed dramatically.

In just the last two years, we’ve seen so many companies suddenly decide they don’t care about making super-thin devices anymore, specifically because camera quality has become a key selling point for smartphones. Camera bumps have gotten pretty big! And pretty thick. Thanks to that compromise, we can finally fit much bigger sensors in our devices.

Those bigger sensors bring a whole lot more information, naturally. They have dramatically better native dynamic range and, suddenly, a much shallower natural depth of field. Now you can point a phone at an object, and there’s a good chance you’ll get a nice, natural, OPTICAL falloff between the area in focus and the area out of focus. That makes portrait mode almost pointless, especially since it never got that great in the first place. Of course, we should keep HDR+. But all this natural information we’re suddenly getting from bigger sensors should be put to use. Right now, phones with huge sensors still process photos as if they had older, smaller ones.

I mean, look at this photo I took on the Pixel 5, with its 1/2.55” sensor, versus this one I took on the Pixel 6, with a MUCH larger 1/1.3” sensor. Sure, the Pixel 6 has a bit more natural background blur, but the processing is almost exactly the same.
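To get a feel for how much larger “MUCH larger” is, here is a rough sketch. The “1/x-inch” labels are a legacy convention inherited from video camera tubes and don’t equal the actual diagonal, so this assumes only that both sensors follow the same convention and aspect ratio, in which case area scales with the square of the nominal size.

```python
def relative_area(nominal_inch_a: float, nominal_inch_b: float) -> float:
    """Area of sensor B relative to sensor A, from their '1/x-inch' optical formats.

    Assumes both diagonals scale linearly with the nominal size and share the
    same aspect ratio, so area scales with the square of the nominal size.
    """
    return (nominal_inch_b / nominal_inch_a) ** 2


pixel5 = 1 / 2.55   # nominal optical format from the text
pixel6 = 1 / 1.3    # nominal optical format from the text
ratio = relative_area(pixel5, pixel6)
print(f"Pixel 6 main sensor area is about {ratio:.1f}x the Pixel 5's")
```

That works out to roughly 3.8 times the area. At the same f-number, shutter speed, and field of view, the total light a sensor collects scales with its area, so this is a substantial jump in raw material for the processing to work with.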

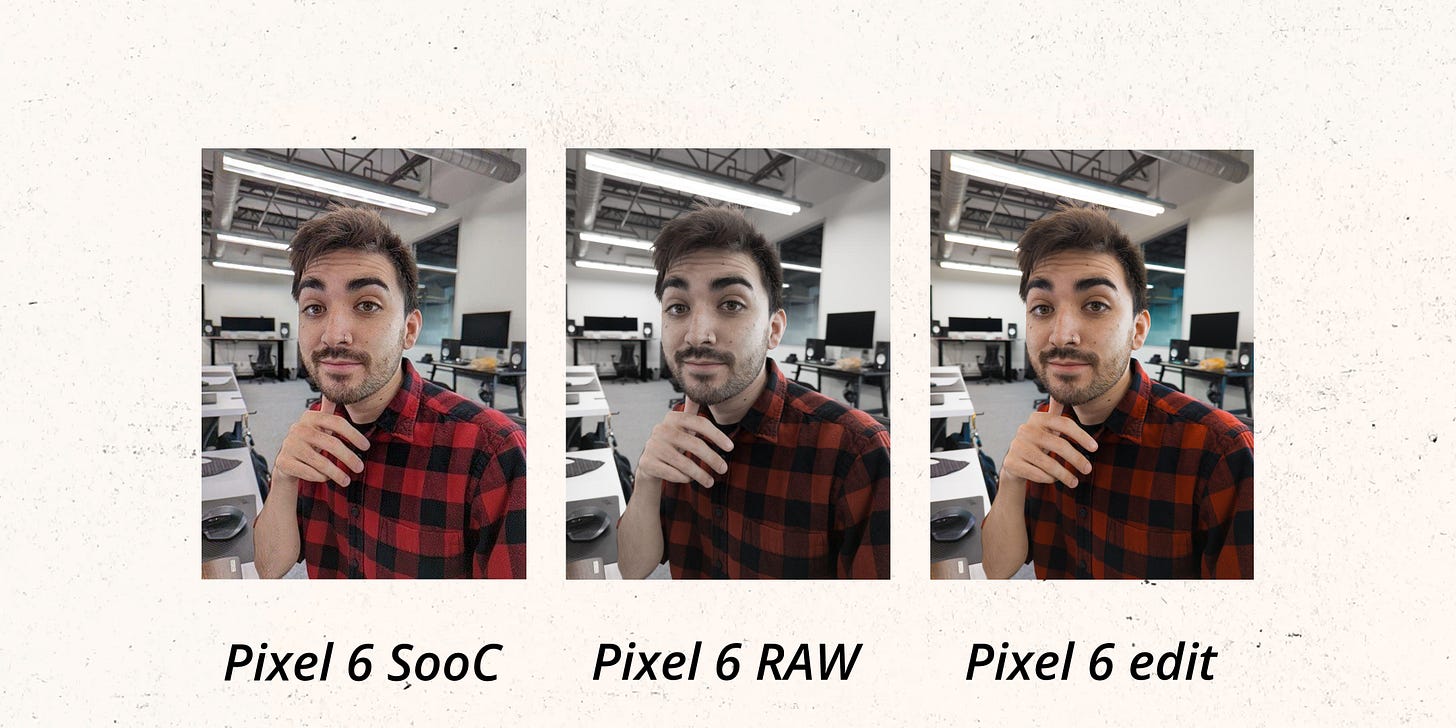

To show you what’s possible with these new sensors, here are a couple of straight-out-of-camera images I took with the Google Pixel 6, next to the RAW files I captured alongside them using RAW + JPEG mode. If we just bump the vibrance to bring the color back in, you start to see what this sensor can really deliver. Remember, the RAW is the unprocessed information the sensor captured. What you usually get when you hit the shutter button is a photo after the phone has processed that raw data.
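Editors don’t publish their exact vibrance formulas, so this is a hypothetical version. It captures the idea behind the slider: boost saturation, but boost muted colors more than already-vivid ones, and leave neutral grays alone. It uses Python’s standard `colorsys` module, with RGB values in the 0 to 1 range.

```python
import colorsys


def vibrance(rgb: tuple[float, float, float], amount: float = 0.5) -> tuple[float, float, float]:
    """Hypothetical vibrance adjustment (amount in [0, 1]).

    s' = s + amount * s * (1 - s): grays (s = 0) and fully saturated colors
    (s = 1) are unchanged; muted colors in between get the largest relative boost,
    and saturation never exceeds 1.
    """
    h, s, v = colorsys.rgb_to_hsv(*rgb)
    s = s + amount * s * (1 - s)
    return colorsys.hsv_to_rgb(h, s, v)


muted_skin = (0.6, 0.5, 0.5)   # hypothetical washed-out skin tone
vivid_red = (1.0, 0.0, 0.0)
gray = (0.5, 0.5, 0.5)
for name, color in [("muted", muted_skin), ("vivid", vivid_red), ("gray", gray)]:
    print(name, tuple(round(c, 3) for c in vibrance(color)))
```

Weighting the boost by (1 - s) is the design choice that separates vibrance from a plain saturation slider: it brings color back into washed-out skin and foliage without pushing already-saturated areas past their limits.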

The processed photo has heavily oversharpened fine edges to compensate for noise reduction. Every individual hair is way too sharp. The eyes start to break down as you zoom in. And the nice natural halation from the light is sucked away completely. But the edited RAW shows the natural focus falloff we’re now getting from these bigger sensors. Straight out of camera, the shadows under the eyes and the tones that add contour to the face were over-HDR’d, which flattened that natural contour. But the RAW version of this photo brings life back into the image. It looks like it was shot on an actual, full-on camera with a much bigger sensor, and it retains all the natural characteristics that make a big sensor great.

Here’s another image I took with the Pixel 6 in Wetzlar, right as the sun was setting. It looks fine at first glance, but look at what’s possible when we compare it to the RAW. The shadows on the ground are way too lifted, when they should be darker to convey the time of day. The highlights should be allowed to peak a bit more. And maybe the biggest giveaway in this photo is the foliage. Every time you take a phone photo with plants in it, they get oversharpened to hell. There is so much detail crammed in there. It’s very hard to take a foliage photo on a modern smartphone and have it look good.

The RAW, on the other hand, has a much more natural look. You can have more contrast between the darks and the lights while still preserving detail. And maybe most obviously in this shot, it’s not oversharpened. The simple fact that this image doesn’t have a bunch of sharpening applied makes it look so much better, and you can feel the softness of the foliage far more naturally than in the straight-out-of-camera image. The time of day shines through so much better. There’s more color because the shadows in the trees aren’t lifted. It’s just an overall better-looking photo, and it stays true to the contour pioneered by Da Vinci and Caravaggio.

The RAW images really show what’s possible with these new sensors. If a phone can capture these images and I can edit them to look like this in a few seconds, the phone should be able to do that itself, automatically. Instead, devices are still processing photos as if there isn’t enough light being captured. And even though phones still capture far less light than dedicated full-frame cameras, we’re at the point where the light they capture is almost good enough. It is so, so close.

Now, I just want to note that I’m not saying we should get rid of computational photography. The innovations we’ve made in that field are legitimately amazing. What I am proposing is that we begin to tweak how these algorithms work to help bring what makes art great back into photos, ESPECIALLY now that we’re starting to get these massive sensors. It’s clear that we’re ABLE to, since we can edit the RAWs ourselves and get a great looking photo. But of course, I fully understand that most people won’t want to edit their photos at all - they primarily want to point, and shoot, and be done with it.

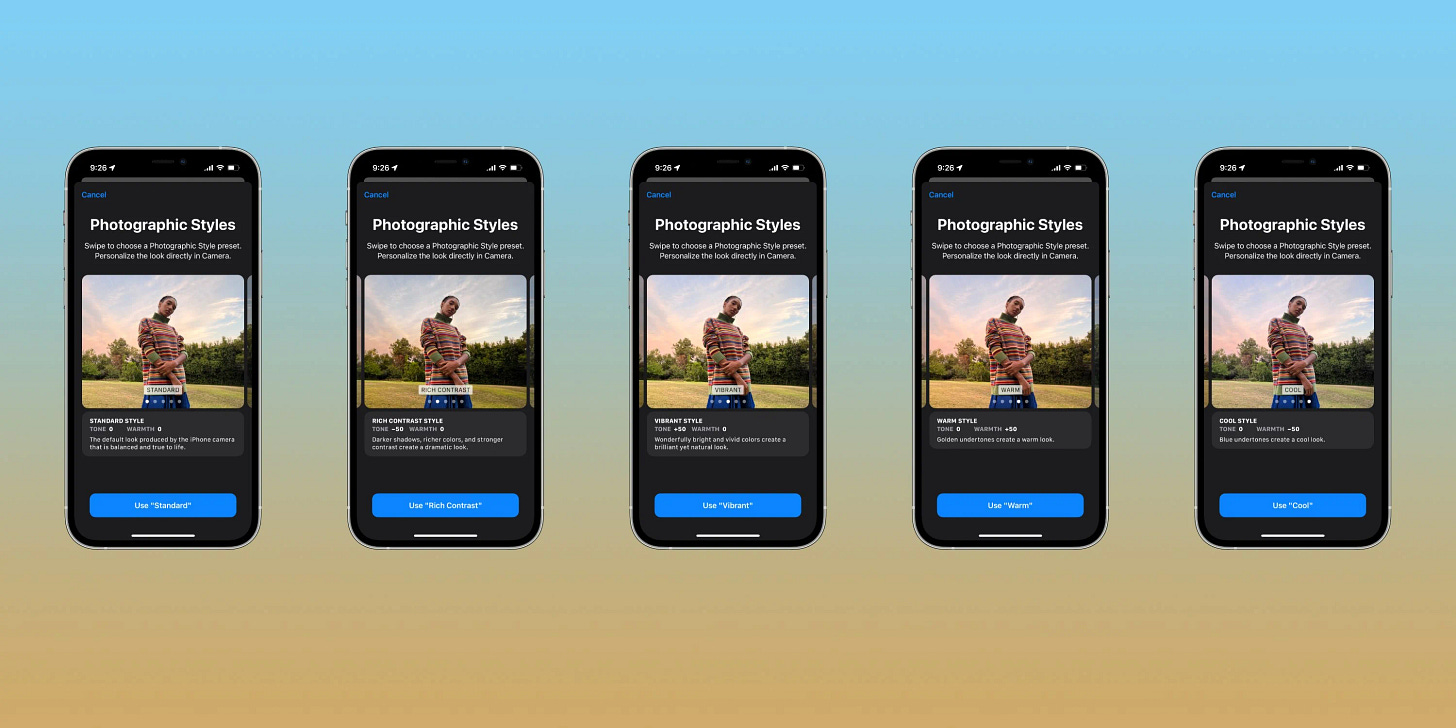

Interestingly, Apple announced something this year with the iPhone 13 that didn’t get as much press as it maybe should have: Photographic Styles. On the surface, these look like the Instagram filters you choose from when posting an image or a story. But instead of laying a filter on top of your photo to change how it looks, a Photographic Style changes how the photo is processed at the point of capture.

Photographic Styles give you a few tweaks to choose from, and I hope we get more in the future. Right now Apple gives you control over tone, which largely governs contrast, and warmth, and it pairs them with a few presets you can choose from if you don’t want to mess with individual settings yourself. Once you select one, or tweak it to your liking, that’s how your camera app will shoot going forward. It’s a super simple way to get a permanently better, more personalized look without editing a RAW file yourself. And the phone has you pick one the first time you open the camera.

Funnily enough, Rich Contrast is possibly the most popular Photographic Style. It adds more contrast and contour to your images: blacks are blacker and less gray, and highlights are brighter. To me, this is how the original Pixel looked before the shadow detail started getting out of control.

But iPhone photos aren’t perfect either. They still carry plenty of the old processing issues, too. And in just the last year, even though a lot of people didn’t think the iPhone 13 was a big update, its camera sensors got dramatically bigger. That’s a much bigger deal than a lot of people realize. If we just lean into the natural physics of what we have, we can finally push smartphone cameras to compete directly with dedicated ones. Of course, there is still a huge difference in how different shooting styles make you feel and inspire you, but we’re this close to a tipping point where images from a phone and from a dedicated camera become completely indistinguishable. We’re so, so close.

Anyways y’all, if you’ve stuck around this long, thanks for sticking with me. I’ll catch ya next time I’ve got something to say. See ya.
